Euler-Lagrange Equation

A while ago, I saw the Brachistochrone Problem, which is: “Find the shape of the curve down which a bead sliding from rest and accelerated by gravity will slip (without friction) from one point to another in the least time.” (Wolfram MathWorld). This problem has a cool answer that involves the Calculus of Variations, specifically the Euler-Lagrange Equation, which I will explain.

Motivation and Derivation

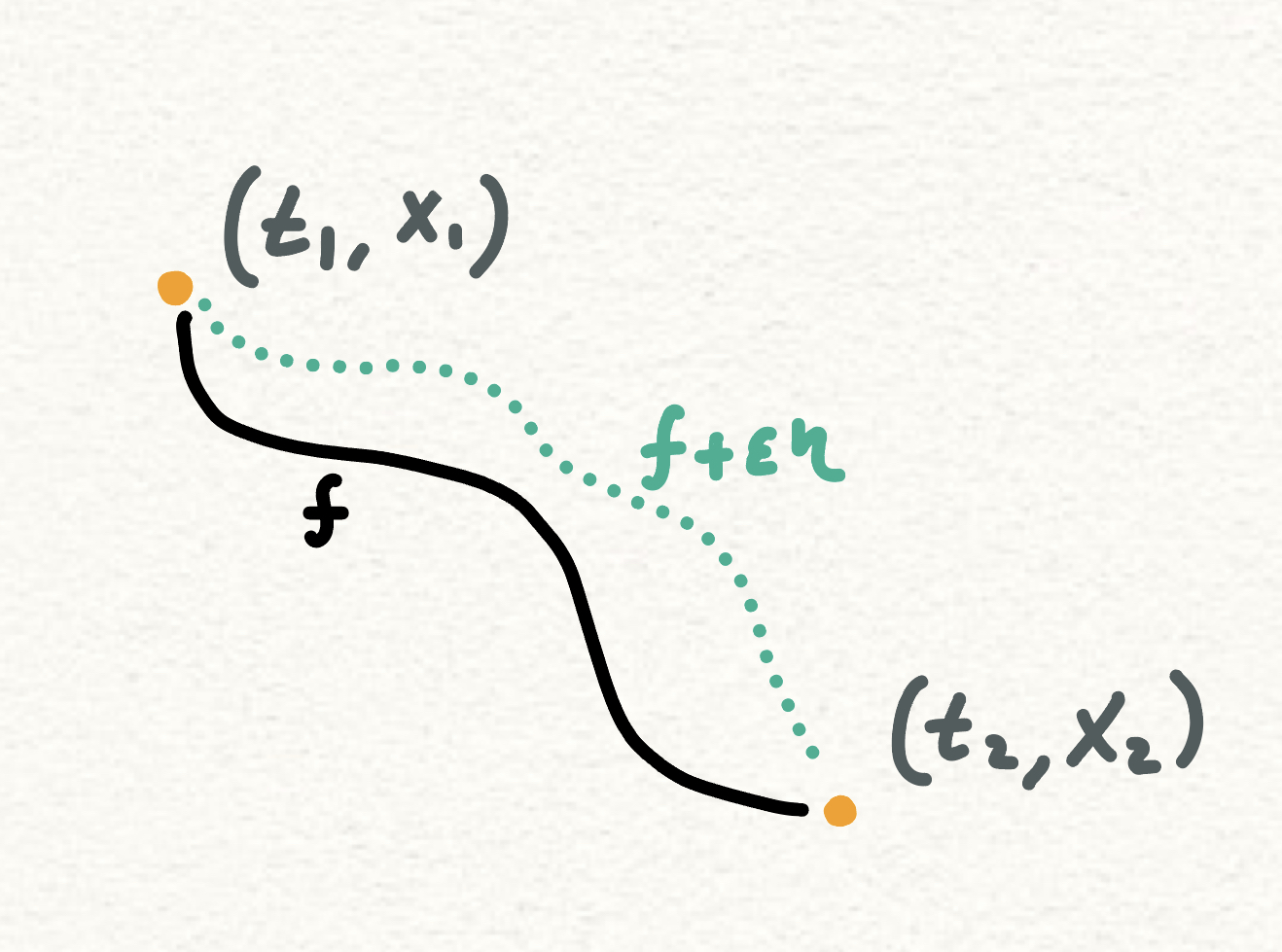

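Moving away from the Brachistochrone Problem, let's look at a more general case that encompasses it. We have two times, $t_1 < t_2$, and we are looking for a function $q(t)$ that satisfies the boundary conditions:

$$q(t_1) = q_1, \qquad q(t_2) = q_2$$

In addition, we have some objective whose local/global min/max we want to find. Its input is a whole function, so it is really a functional, expressed as:

$$S[q] = \int_{t_1}^{t_2} L\big(t, q(t), \dot q(t)\big)\,dt$$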

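Here $L$ can be any smooth function. In the case of the Brachistochrone Problem, the independent variable is the horizontal position $x$ (playing the role of $t$) and $y(x)$ is how far the bead has dropped below its starting point (playing the role of $q$). With arc length $ds = \sqrt{1 + y'^2}\,dx$ and speed $v = \sqrt{2gy}$ from energy conservation, the time spent on a small piece of the curve is $ds/v = L\,dx$, where:

$$L(x, y, y') = \sqrt{\frac{1 + y'^2}{2 g y}}$$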
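To make the objective concrete, here is a minimal Python sketch that evaluates the Brachistochrone functional numerically for a given curve. It assumes $y$ is measured downward with $y(0) = 0$ and uses $g = 9.81$; `brachistochrone_time` is a hypothetical helper name. As a sanity check, it compares a straight line from $(0, 0)$ to $(1, 1)$ against the closed-form time for sliding down an incline from rest.

```python
import math


def brachistochrone_time(y, dy, x_end, g=9.81, n=10_000):
    """Approximate J[y] = integral from 0 to x_end of sqrt((1 + y'(x)^2) / (2 g y(x))) dx.

    Assumes y(0) = 0 and y(x) > 0 on (0, x_end], with y measured downward.
    Substituting x = u**2 (so dx = 2u du) cancels the 1/sqrt(x) blow-up of the
    integrand at the start, where the bead is still at rest.
    """
    u_end = math.sqrt(x_end)
    h = u_end / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * h  # midpoint rule in the variable u
        x = u * u
        integrand = math.sqrt((1 + dy(x) ** 2) / (2 * g * y(x)))
        total += integrand * 2 * u * h
    return total


# Straight line from (0, 0) to (1, 1): y = m x with m = 1.
m, x_end, g = 1.0, 1.0, 9.81
t_line = brachistochrone_time(lambda x: m * x, lambda x: m, x_end, g)

# Closed form for sliding down an incline from rest: sqrt(2 X (1 + m^2) / (g m)).
t_exact = math.sqrt(2 * x_end * (1 + m * m) / (g * m))
print(t_line, t_exact)  # both ≈ 0.6386
```

The substitution $x = u^2$ is a design choice: it removes the endpoint singularity entirely for curves with a finite starting slope, while curves that start vertically (like the cycloid) still leave a milder, integrable singularity that converges more slowly.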

So let's say $q$ is optimal. Then, in hand-wavy terms, we want to somehow take a derivative in this function space and set it to 0. So we need to be able to describe smooth changes to $q$:

$$q_\varepsilon(t) = q(t) + \varepsilon\,\eta(t)$$

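Here, $\varepsilon$ is a scalar value and $\eta$ is any smooth function with $\eta(t_1) = \eta(t_2) = 0$, so varying $\varepsilon$ along with $\eta$ can explore any function with the same boundary conditions. But the main thing I was thinking about is what led the creators to add the $\varepsilon$. Any function that can be written as $q + \varepsilon\eta$ can also be written as $q + \eta$, without the use of $\varepsilon$.

My current answer is this: for the optimal $q$, we want to show that any transition to another function makes the objective value no better. To describe this transition smoothly, we can't just use $\eta$: I can't think of any straightforward way to parametrize all perturbations directly, since that would require me to somehow control $\eta$ itself. Instead, I can let $\eta$ be anything and control the single scalar $\varepsilon$, which turns the question into ordinary one-variable calculus.

Another perspective is to think of it as an infinite system of equations that we solve at once, one equation for each particular $\eta$. Each equation says that $\varepsilon = 0$ is a stationary point of $\varepsilon \mapsto S[q + \varepsilon\eta]$, that is:

$$\left.\frac{d}{d\varepsilon} S[q + \varepsilon\eta]\right|_{\varepsilon = 0} = 0$$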
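The claim that the derivative with respect to $\varepsilon$ vanishes at $\varepsilon = 0$ for the family $q + \varepsilon\eta$ (with $\eta$ zero at both endpoints) is easy to check numerically. The sketch below assumes an illustrative Lagrangian that is not the Brachistochrone one, $L = \sqrt{1 + \dot q^2}$ (the arc length of the graph of $q$), whose optimum between $(0, 0)$ and $(1, 1)$ is the straight line $q(t) = t$. The perturbation $\eta(t) = \sin(\pi t)$ and the helper names `action` and `dS_deps_at_zero` are hypothetical choices for illustration.

```python
import math


def action(q_dot, eta_dot, eps, n=2000):
    """Midpoint-rule value of S(eps) = integral over [0, 1] of sqrt(1 + (q' + eps * eta')^2) dt.

    L = sqrt(1 + q_dot^2) does not depend on q itself, so only the slopes of
    q and eta are needed.
    """
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        t = (i + 0.5) * h
        v = q_dot(t) + eps * eta_dot(t)
        total += math.sqrt(1 + v * v) * h
    return total


def dS_deps_at_zero(q_dot, eta_dot, h=1e-4):
    """Central finite difference of S(eps) around eps = 0."""
    return (action(q_dot, eta_dot, h) - action(q_dot, eta_dot, -h)) / (2 * h)


def eta_dot(t):
    # eta(t) = sin(pi t) vanishes at t = 0 and t = 1.
    return math.pi * math.cos(math.pi * t)


# Both candidates satisfy q(0) = 0 and q(1) = 1.
def line_dot(t):
    return 1.0  # q(t) = t, the optimum (a straight line)


def parabola_dot(t):
    return 2 * t  # q(t) = t**2, not optimal


print(dS_deps_at_zero(line_dot, eta_dot))      # ≈ 0
print(dS_deps_at_zero(parabola_dot, eta_dot))  # clearly nonzero
```

For the straight line the derivative is zero up to rounding, while for the parabola, which has the same boundary conditions, it is not, so a small $\varepsilon$ of the right sign shortens the parabola.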

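This is the crux of the Euler-Lagrange Equation; the rest of the derivation is, I feel, more mechanical. I copied the first and last expressions from Wikipedia. You can check the missing details there, but they are just regular calculus techniques: differentiate under the integral sign, then integrate the $\dot\eta$ term by parts, where the boundary term vanishes because $\eta(t_1) = \eta(t_2) = 0$.

$$0 = \left.\frac{d}{d\varepsilon} S[q + \varepsilon\eta]\right|_{\varepsilon = 0} = \int_{t_1}^{t_2} \eta(t)\left(\frac{\partial L}{\partial q} - \frac{d}{dt}\frac{\partial L}{\partial \dot q}\right)dt$$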
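The mechanical steps can also be checked numerically: for any $q$, optimal or not, the derivative $\left.\frac{d}{d\varepsilon}S[q+\varepsilon\eta]\right|_{\varepsilon=0}$ should equal $\int \eta\,\big(\frac{\partial L}{\partial q} - \frac{d}{dt}\frac{\partial L}{\partial \dot q}\big)\,dt$. This sketch assumes an illustrative arc-length Lagrangian $L = \sqrt{1 + \dot q^2}$ on $[0, 1]$, the perturbation $\eta(t) = \sin(\pi t)$, and a deliberately non-optimal path $q(t) = t^2$, so that both sides are nonzero and the comparison means something. All names are hypothetical.

```python
import math

# Illustrative choices (assumptions, not from the text above):
# L(t, q, q_dot) = sqrt(1 + q_dot**2) on [0, 1], q(t) = t**2, eta(t) = sin(pi t).
N = 4000
H = 1.0 / N
MIDPOINTS = [(i + 0.5) * H for i in range(N)]


def q_dot(t):
    return 2 * t


def q_ddot(t):
    return 2.0


def eta(t):
    return math.sin(math.pi * t)


def eta_dot(t):
    return math.pi * math.cos(math.pi * t)


def S(eps):
    """Midpoint-rule value of S[q + eps * eta]; L ignores q, so only slopes matter."""
    return sum(math.sqrt(1 + (q_dot(t) + eps * eta_dot(t)) ** 2) for t in MIDPOINTS) * H


# First expression: dS/d(eps) at eps = 0, via a central finite difference.
h = 1e-4
first = (S(h) - S(-h)) / (2 * h)


def bracket(t):
    """dL/dq - d/dt dL/dq_dot.

    Here dL/dq = 0 and d/dt [q_dot / sqrt(1 + q_dot^2)] = q_ddot / (1 + q_dot^2)^(3/2).
    """
    return 0.0 - q_ddot(t) / (1 + q_dot(t) ** 2) ** 1.5


# Last expression: integral of eta times the bracket.
last = sum(eta(t) * bracket(t) for t in MIDPOINTS) * H

print(first, last)  # the two numbers agree to several decimal places
```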

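Now, we initially said that $\eta$ can be anything, so for the integral to equal 0 for every such $\eta$, the expression in parentheses has to equal 0. You can easily construct a counterargument to see intuitively why this has to be the case: if that expression were, say, positive near some $t_0$, choosing $\eta$ as a small bump around $t_0$ (and zero elsewhere) would make the integral positive. Formally, this is known as the Fundamental Lemma of the Calculus of Variations. This gives us the Euler-Lagrange Equation:

$$\frac{\partial L}{\partial q}\big(t, q(t), \dot q(t)\big) - \frac{d}{dt}\frac{\partial L}{\partial \dot q}\big(t, q(t), \dot q(t)\big) = 0$$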
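The well-known answer to the Brachistochrone Problem is a cycloid. As a hedged sanity check of the Euler-Lagrange Equation, this sketch plugs a cycloid $x = R(\theta - \sin\theta)$, $y = R(1 - \cos\theta)$ (with $y$ measured downward) into the Brachistochrone Lagrangian $L(x, y, y') = \sqrt{(1 + y'^2)/(2gy)}$ and checks that $\frac{\partial L}{\partial y} - \frac{d}{dx}\frac{\partial L}{\partial y'}$ is numerically zero. The values of $g$ and $R$ and the helper names are assumptions. Since $y(x)$ has no simple closed form along the cycloid, $\frac{d}{dx}$ is computed with the chain rule as $\frac{d}{d\theta}$ divided by $\frac{dx}{d\theta}$.

```python
import math

G = 9.81  # assumed gravitational acceleration
R = 0.5   # assumed cycloid radius; any R > 0 should work


# Cycloid through the origin, y measured downward, 0 < theta < 2 pi.
def y_of(theta):
    return R * (1 - math.cos(theta))


def slope(theta):
    """y'(x) = (dy/dtheta) / (dx/dtheta) along the cycloid."""
    return math.sin(theta) / (1 - math.cos(theta))


# Partial derivatives of L(x, y, y') = sqrt((1 + y'^2) / (2 g y)).
def dL_dy(y, yp):
    return -0.5 * math.sqrt((1 + yp * yp) / (2 * G)) * y ** -1.5


def dL_dyp(y, yp):
    return yp / (math.sqrt(1 + yp * yp) * math.sqrt(2 * G * y))


def euler_lagrange_residual(theta, d=1e-5):
    """dL/dy - d/dx (dL/dy') at the cycloid point with parameter theta."""
    def p(th):
        return dL_dyp(y_of(th), slope(th))

    dp_dtheta = (p(theta + d) - p(theta - d)) / (2 * d)  # central difference in theta
    dx_dtheta = R * (1 - math.cos(theta))
    return dL_dy(y_of(theta), slope(theta)) - dp_dtheta / dx_dtheta


for theta in (0.5, 1.0, 2.0, 3.0):
    print(theta, euler_lagrange_residual(theta))  # ≈ 0 up to finite-difference error
```

Each of the two terms is individually far from zero (for example, $\partial L/\partial y$ is about $-2$ at $\theta = 1$ with these values), so a near-zero residual is a meaningful check rather than a coincidence.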

References

https://en.wikipedia.org/wiki/Euler–Lagrange_equation

https://en.wikipedia.org/wiki/Calculus_of_variations

https://en.wikipedia.org/wiki/Fundamental_lemma_of_the_calculus_of_variations

https://mathworld.wolfram.com/BrachistochroneProblem.html