Fractals in LLMs

These are the key ideas from an interesting paper.

Introduction

What patterns do models potentially exploit in natural language? Identifying such patterns and explicitly designing metrics to measure them is challenging, but there is a sizeable body of work that studies fractals and self-similarity metrics for long-range processes. These metrics provide a lens for checking whether the patterns in data repeat across different scales. The authors of this paper use these metrics to show that natural language does indeed exhibit self-repeating patterns across scales. Moreover, the metrics vary with the type of dataset (for example math, text, or code), which sheds light on how different datasets have different structural patterns. For example, code has one of the highest self-similarity values, indicating that the patterns in snippets of code closely resemble the macro patterns at the algorithmic scale.

Method

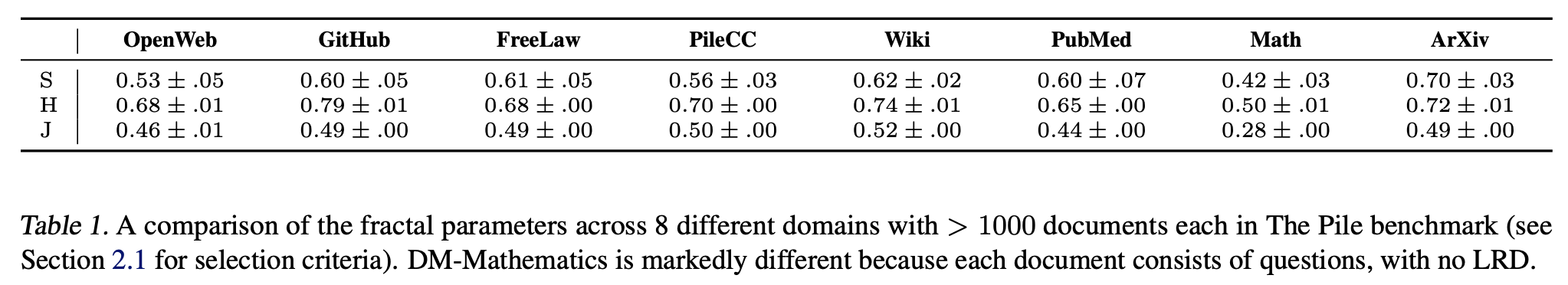

The paper uses three main metrics: self-similarity, the Hurst parameter, and the Joseph effect. Taking a deeper look at self-similarity, it measures the extent to which a stochastic process resembles itself when dilated. Formally, a process $(X_t)$ is self-similar with exponent $S$ if, for every $\tau > 0$,

$$(X_{\tau t})_{t} \overset{d}{=} (\tau^{S} X_t)_{t}$$

where $\overset{d}{=}$ denotes equality in distribution.

This conveys that the shape of the process is preserved when time is rescaled by $\tau$, provided the magnitude is rescaled accordingly by $\tau^{S}$. To estimate $S$, the authors define $p_\epsilon(\tau)$, the probability mass of the event $\{|X_{t+\tau} - X_t| \le \epsilon\}$, and approximate it with the power law $p_\epsilon(\tau) \sim \tau^{-S}$. This tells us that as $\tau$ increases, we expect the probability that the process is still within $\epsilon$ of its earlier value to decrease, and how quickly it decreases is determined by $S$. So this also gives some insight into how long-range the dependence is. The other metrics test other aspects of long-range, self-similar patterns, and for conciseness I will not detail their definitions here.
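As a rough illustration of the power-law fit, here is a minimal sketch that estimates a self-similarity exponent $S$ by measuring $p_\epsilon(\tau) = P(|X_{t+\tau} - X_t| \le \epsilon)$ empirically for several lags $\tau$ and fitting a line in log-log space. The function name `estimate_self_similarity`, the choice of lags, and the value of `eps` are my own assumptions for illustration, not the authors' code, and the input is a synthetic Gaussian random walk rather than language data.

```python
import numpy as np

def estimate_self_similarity(X, taus, eps=0.2):
    """Estimate S from the power law p_eps(tau) ~ tau^(-S).

    X    : 1-D array, the integral process X_t
    taus : 1-D array of positive integer lags
    eps  : tolerance defining the event |X_{t+tau} - X_t| <= eps
    """
    X = np.asarray(X, dtype=float)
    p = []
    for tau in taus:
        # Increments of the integral process over a window of length tau.
        diffs = np.abs(X[tau:] - X[:-tau])
        # Empirical probability of the event |X_{t+tau} - X_t| <= eps.
        p.append(np.mean(diffs <= eps))
    p = np.array(p)
    # Linear fit of log p against log tau; the slope is -S.
    slope, _intercept = np.polyfit(np.log(taus), np.log(p), 1)
    return -slope, p

# Synthetic stand-in for the integral process: a Gaussian random walk,
# for which the theoretical exponent is S = 0.5.
rng = np.random.default_rng(0)
X = np.cumsum(rng.standard_normal(200_000))
taus = np.array([4, 8, 16, 32, 64, 128])
S_hat, p = estimate_self_similarity(X, taus)
print(f"estimated S: {S_hat:.3f}")
```

The random walk is only a sanity check: its increments are independent, so the estimate should land near 0.5. Whatever the real data gives is then read relative to that baseline.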

Natural language is not, by itself, a stochastic process. Therefore, in order to convert it to one, the authors use PaLM-8B trained on the Google Web Trillion Word Corpus. Then for each token $w_t$, let $p_t = p(w_t \mid w_1, \dots, w_{t-1})$ be the probability the model assigns to it given the preceding tokens. Then let $z_t = -\log_2 p_t$ be the number of bits associated with the token $w_t$. Then we can take a chunk of a corpus, like a paragraph, and normalize the $z_t$'s to zero mean and unit variance to create the increments $x_t$. Then we form the integral process $X_t = \sum_{k=1}^{t} x_k$. Therefore, we have a method to convert tokens into an integral process, which can then be analyzed using the self-similarity and long-range dependence metrics.
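The conversion from tokens to an integral process can be sketched as follows. This is a minimal illustration under stated assumptions: the per-token probabilities are taken as given (in the paper they come from the language model; here `probs` is a made-up list), the normalization is to zero mean and unit variance, and `integral_process` is a hypothetical helper name.

```python
import numpy as np

def integral_process(token_probs):
    """Convert per-token model probabilities into increments x_t and
    the integral process X_t."""
    p = np.asarray(token_probs, dtype=float)
    z = -np.log2(p)                # bits associated with each token
    x = (z - z.mean()) / z.std()   # normalize: zero mean, unit variance
    X = np.cumsum(x)               # X_t = x_1 + ... + x_t
    return x, X

# Made-up probabilities for a six-token chunk (illustrative only).
probs = [0.5, 0.25, 0.125, 0.5, 0.0625, 0.25]
x, X = integral_process(probs)
print(x)
print(X)
```

Because the increments are centered within the chunk, the integral process returns to approximately zero at the final token. The quantity of interest is how it wanders in between.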

Based on the figure, we see that more structured datasets such as code, legal, and technical documents yield more self-similarity than natural text. Math is an exception because, as the authors state, the dataset consists of random questions. This is an interesting observation, and as a personal note I wonder how it relates to the phenomenon that LLMs trained on code reason better, even on non-code tasks. Maybe many of the patterns extracted from highly self-similar datasets transfer better?