Wasserstein Distances

Intro

Previously I made a post about discrete optimal transport where we walked through ways to efficiently compute approximations to distances between discrete distributions. However, what can we do when we want to calculate distances between continuous distributions? One might recall metrics like, KL, Jensen-Shannon yet those have some critical issues. Specifically, when intersection of the supports of the distributions are empty then the aforementioned metrics are ill defined. Therefore, we want to look into something called Earth Movers (EM) distance, and this is often called Wasserstein distance Distance or Kantorovich–Rubinstein metric.

Wasserstein Distance

This is just a continuous formulations of the EM distance

Unfortunately, the integrals make this intractable to compute in practical situations. Therefore, we have to look into Kantorovich-Rubinstein Duality which states

We can show why this dual formulation is useful.

Kantorovich Rubinstein Duality

Good sources for looking at the proofs are linked in the reference section.

Notes

The key aspects of the proof are when can you set , when is convex with respect to , and concave with respect to - from the linked textbook by Ambrosio et al. You can also check when we change to be

Then the resulting dual problem with equality is

This is useful as shown below.

How to optimize?

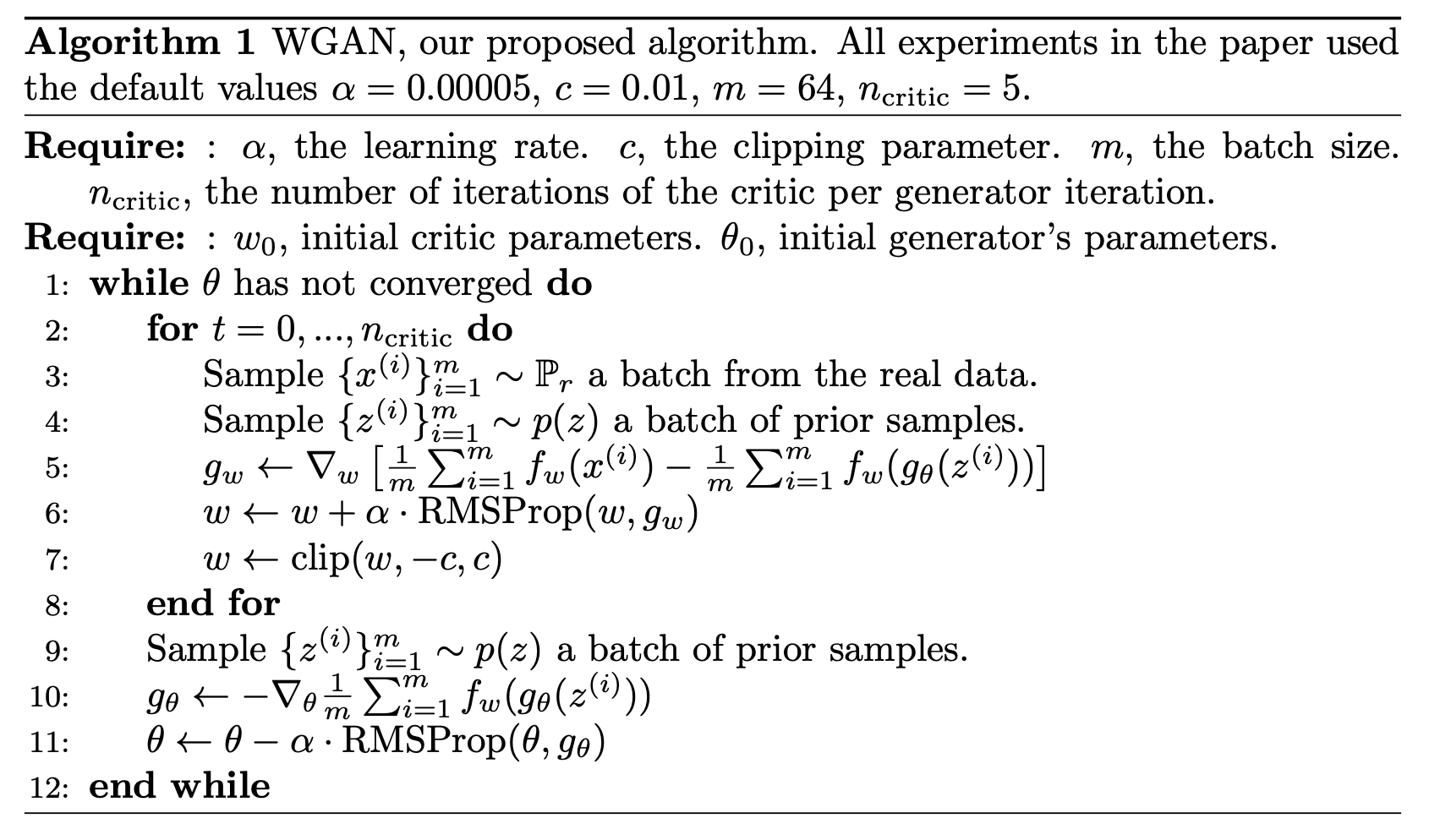

Let’s look at Wasserstein GAN paper. One thing in particular I found interesting is how they optimized over a set of K Lipschitz functions. Specifically, they clip the weights to ensure that set of weights is bounded, thus implying that the network is K Lipschitz for some . What that is doesn’t matter since recall that it just scales by . Therefore, we can use the Kantorovich Rubinstein Duality as shown in the WGAN algorithm below, where they use Monte Carlo estimator for estimating the two expectations.

References

https://vincentherrmann.github.io/blog/wasserstein/

https://courses.cs.washington.edu/courses/cse599i/20au/resources/L12_duality.pdf

https://abdulfatir.com/blog/2020/Wasserstein-Distance/

https://link.springer.com/chapter/10.1007/978-3-030-72162-6_3