Improving Recurrent Models with Group Theory

Today’s article is going to be about understanding combination of the following papers: Unlocking State-Tracking in Linear RNNS Through Negative Eigenvalues, DeltaProduct: Increasing the Expressivity of DeltaNet Through Products of Householders by Riccardo Grazzi and Julien Siems and others. I came across this paper through the ASAP Seminar.

Motivation: First to put down some notation, we will denote a recurrent model as

In the DeltaNet formulation,

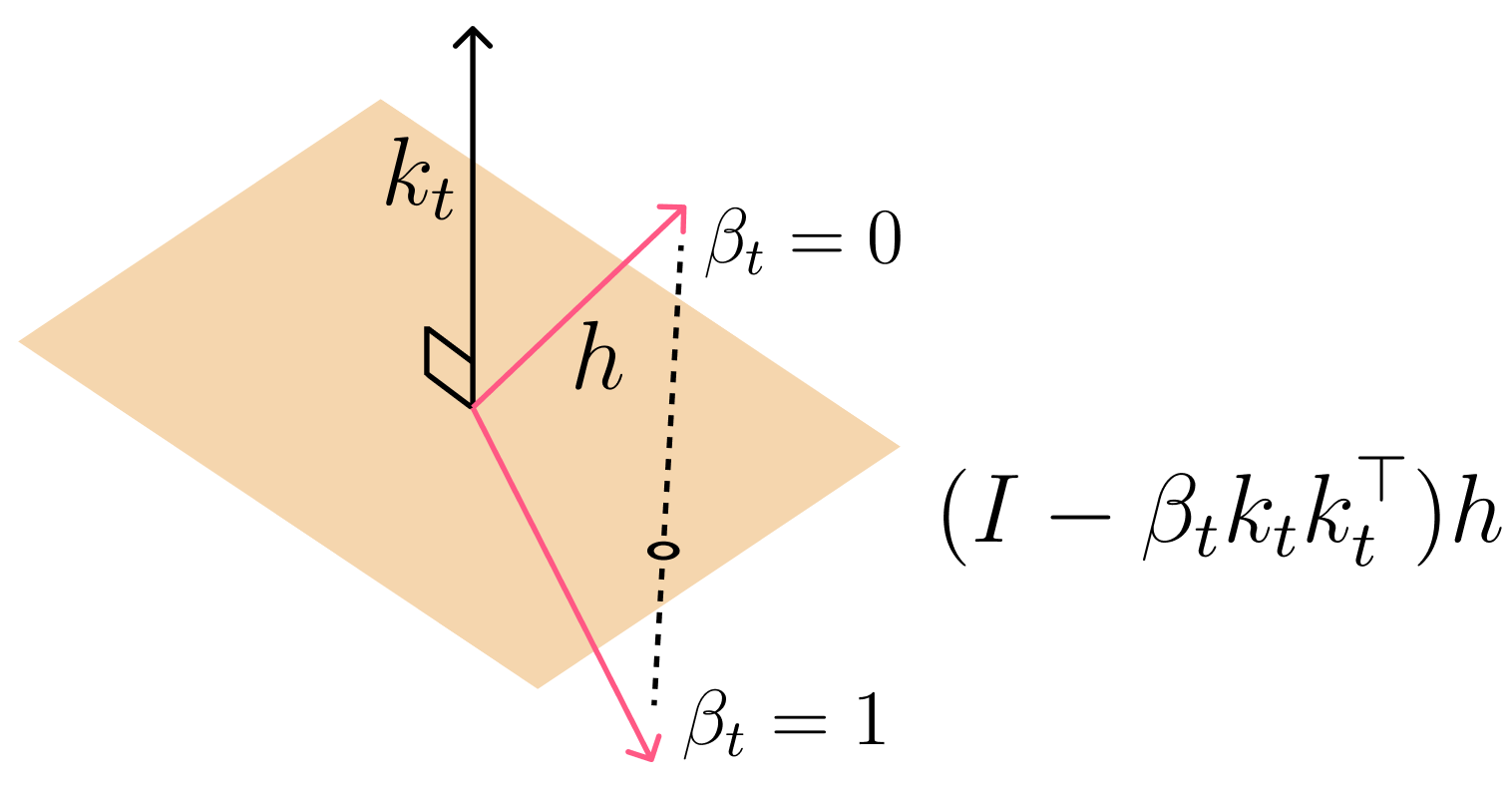

This can also be seen as 1 step gradient descent on . Importantly, since and the range of , and this means the eigen values of are bounded between . Therefore, if we modify the update to be , then this makes the eigenvalues be in the range . Interestingly, this is a householder matrix! To refresh our memory house holder transformations are reflections.

In this article, we focus on how a particular extension enables recurrent models to solve state tracking problems, especially those that arise in structured domains like group theory. To set the stage, recall that a group is a set equipped with an operation that satisfies four key properties: closure, associativity, the existence of an identity element, and the existence of inverses. A powerful result in abstract algebra is Cayley’s theorem, which states that every finite group is isomorphic to a subgroup of the symmetric group , the group of all permutations of elements. This means that, in principle, any finite group computation can be modeled using permutations.

One state tracking problem we consider involves the symmetric group . The input is a sequence of permutations and the goal is for the model to output the cumulative group element at each step:

where denotes composition of permutations, and the product is taken from right to left (i.e., is applied first). This task requires the model to maintain a hidden state that effectively encodes the current group element, updating it with each new permutation.

As a concrete example, let and , both elements of . Then the desired output after the second step is , which is the result of composing the two permutations.

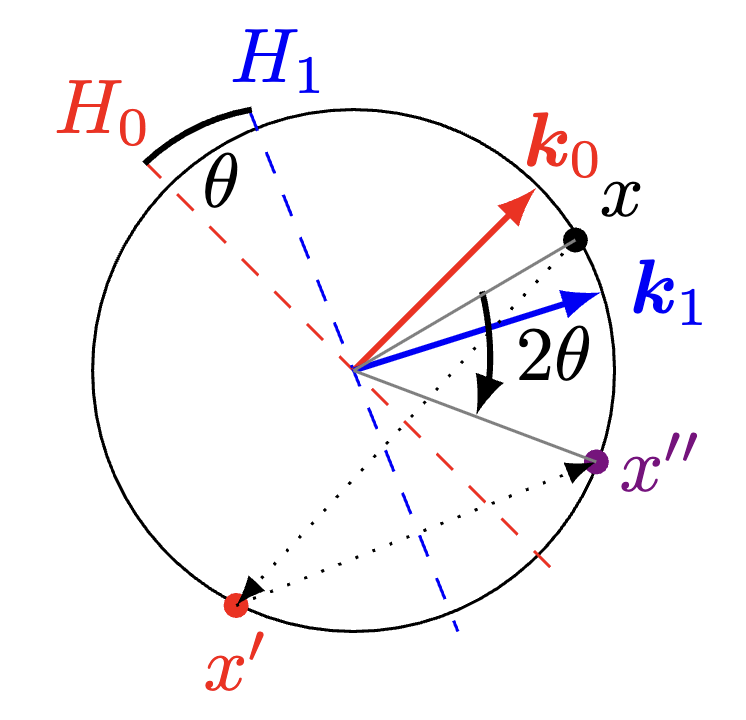

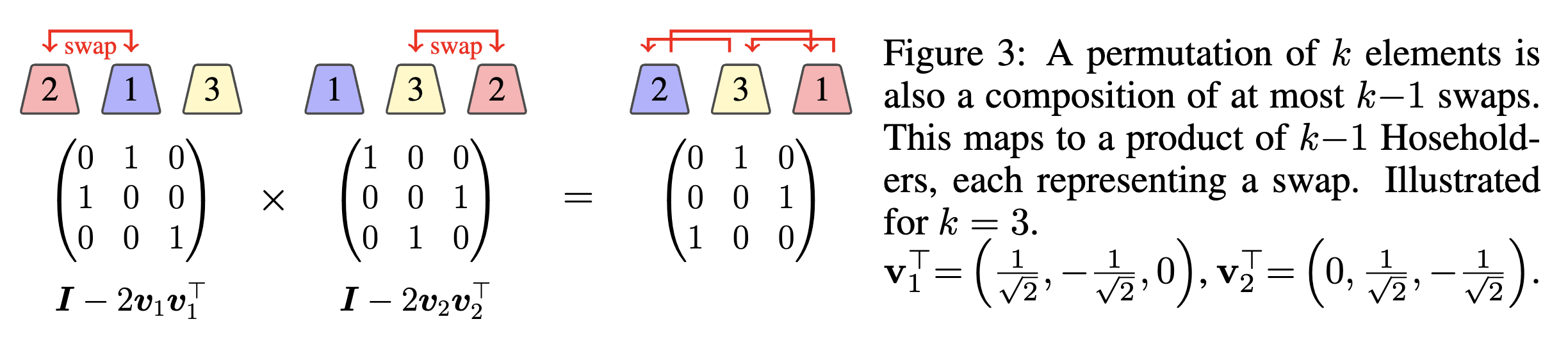

So how does this relate to Householder transformations? When permutations are viewed as transformations acting on a space, swaps can be naturally expressed using Householder reflections—a class of orthogonal transformations that reflect vectors across hyperplanes. This connection allows us to embed group operations directly into the linear dynamics of recurrent models, facilitating state tracking. The paper provides a helpful figure that visually demonstrates how permutations correspond to sequences of Householder reflections.

This also implies that if I am operating on , then I would need householder reflections. And this brings us to the DeltaProduct Formulation: For each token , generate keys, values, and step sizes (the betas):

where:

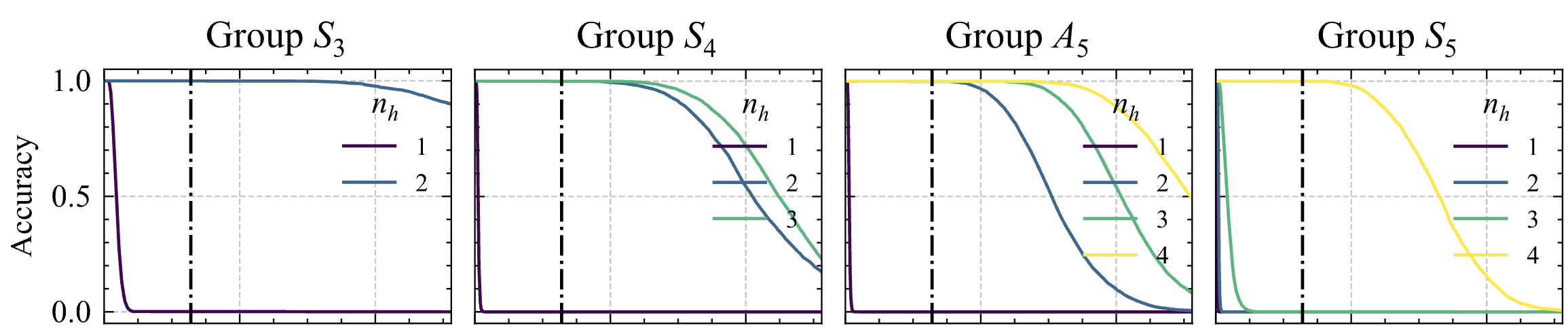

So to put in words, we are expanding our hidden state update to do updates, each step having its own dedicated weight to produce a house holder transformation. This can also be seen as doing steps of gradient descent on the associate recall objective. Now this will allow us to perform state tracking. Interestingly, there is a case where in you only need , instead of the theoretical value 3. See the following Figure 3.

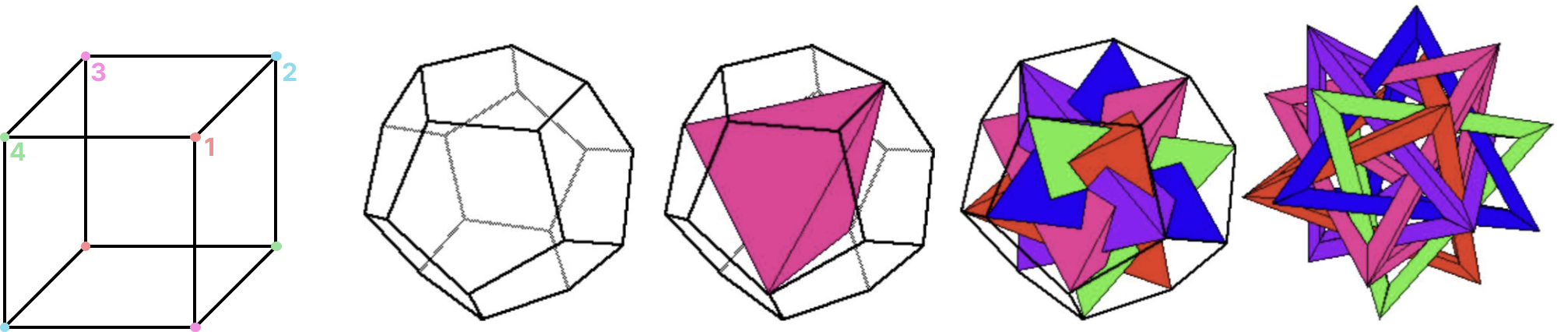

The reason is they are isomorphic to subgroups of . To see this, first consider the setting where we have an ordering of 4 elements. Interestingly, we can represent these 4 elements as the diagonals of a 3D cube. The operations on this cube are orientation preserving rotations, and you can record the ordering of the 4 diagonals post transformation as the new group element. Now, things for are a bit more involved. is the alternating group of 5 elements, the alternating group has only even permutations of the 5 elements - meaning there are an even number of swaps to create the permutations. We can actually treat each element as the position of a colored tetrahedron inside an icosahedron, and again we can rotate the icosahedron while preserving its orientation and record the position of the tetrahedrons after a rotation. See both examples below, and the icosahedron is taken from this article.

In addition, the Cartan-Dieudonné theorem, states that

that every orthogonal transformation in an n-dimensional symmetric bilinear space can be described as the composition of at most n reflections.

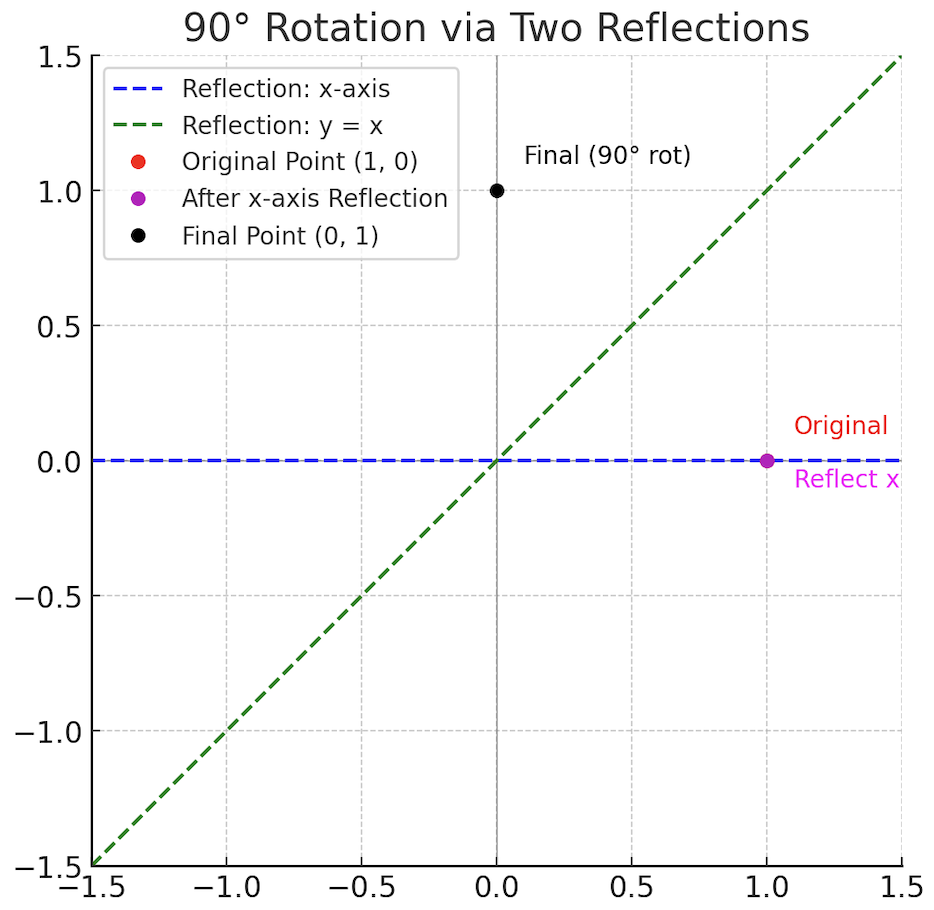

For a rotation in 3D space, we just need 2 reflections, provided that our keys are also in subspace of dimension 3. This explains why can be just 2. Here are some illustrations to explain this geometrically. The first one is from the paper, and the second was generated in Matplotlib just for myself to see an example.

Acknowledgement

Big thanks to Riccardo Grazzi, and Julien Siems for reading the article and providing really helpful feedback on adding more depth.

References:

https://en.wikipedia.org/wiki/Householder_transformation

https://en.wikipedia.org/wiki/Cartan–Dieudonné_theorem

https://en.wikipedia.org/wiki/Symmetric_group

https://arxiv.org/abs/2404.08819

https://en.wikipedia.org/wiki/Alternating_group